Context-aware agentics for cross-functional teams: stop the confusion before it becomes a hallucination

Why I keep coming back to this

When we build agentic systems inside real companies, the technical work is rarely the hardest part. The hard part is language.

Every function develops its own shorthand over time. Legal, finance, operations, development, each group has a way of speaking that is tightly linked to the information they work with every day. That is efficient for them, but it becomes a trap the moment we cross boundaries.

And we do not notice it because humans compensate naturally.

In a meeting, if I realise someone is a lawyer, I adjust my words. If I realise someone is finance, I get more precise about definitions. If I am in a board context, I shift to strategic framing. That adaptation is a social skill we have learned through experience.

Your agents do not have that skill unless you design for it.

The most common failure mode: the question makes sense to you, not to the system

Here is the pattern I see repeatedly.

A person asks a question in the way their function expects to ask it. The meaning is obvious to them, because their context fills in the gaps. But to an agent, those gaps look like instructions to invent something plausible.

A simple example: you ask, "for year 21, 22, what was margin?"

To a finance person, that might mean a specific financial year definition that is well understood internally. To someone else, it could mean calendar year. Or it could mean a company year that starts in a different month. Each one changes the answer.

If the agent guesses, you get a confident answer that feels authoritative, but is built on an assumption you never agreed.

That is how hallucination shows up in day to day work.

Source: https://www.tcg.com/blog/ai-hallucinates-when-you-ask-the-wrong-question/

"An LLM hallucinates when it believes that’s what the prompt is asking for. The model defaults to a mode of creative plausibility unless you explicitly instruct it to operate under the constraints of verifiable fact."

A practical reframe: hallucination is often missing context, not bad intent

This is why I do not treat hallucinations as an abstract AI issue. In cross functional environments, hallucination is often a symptom of misaligned definitions.

Source: https://documentation.suse.com/suse-ai/1.0/html/AI-preventing-hallucinations/index.html

"Vague queries can lead to random or inaccurate answers. Lack of clear context. When the language model lacks context, it can fabricate answers."

So rather than demanding everyone become bilingual in every department (which never works), we teach the agent to behave like a good colleague.

A good colleague does three things before answering:

- they pause

- they ask what you mean

- they confirm the expected output

That is the behaviour we want to engineer.

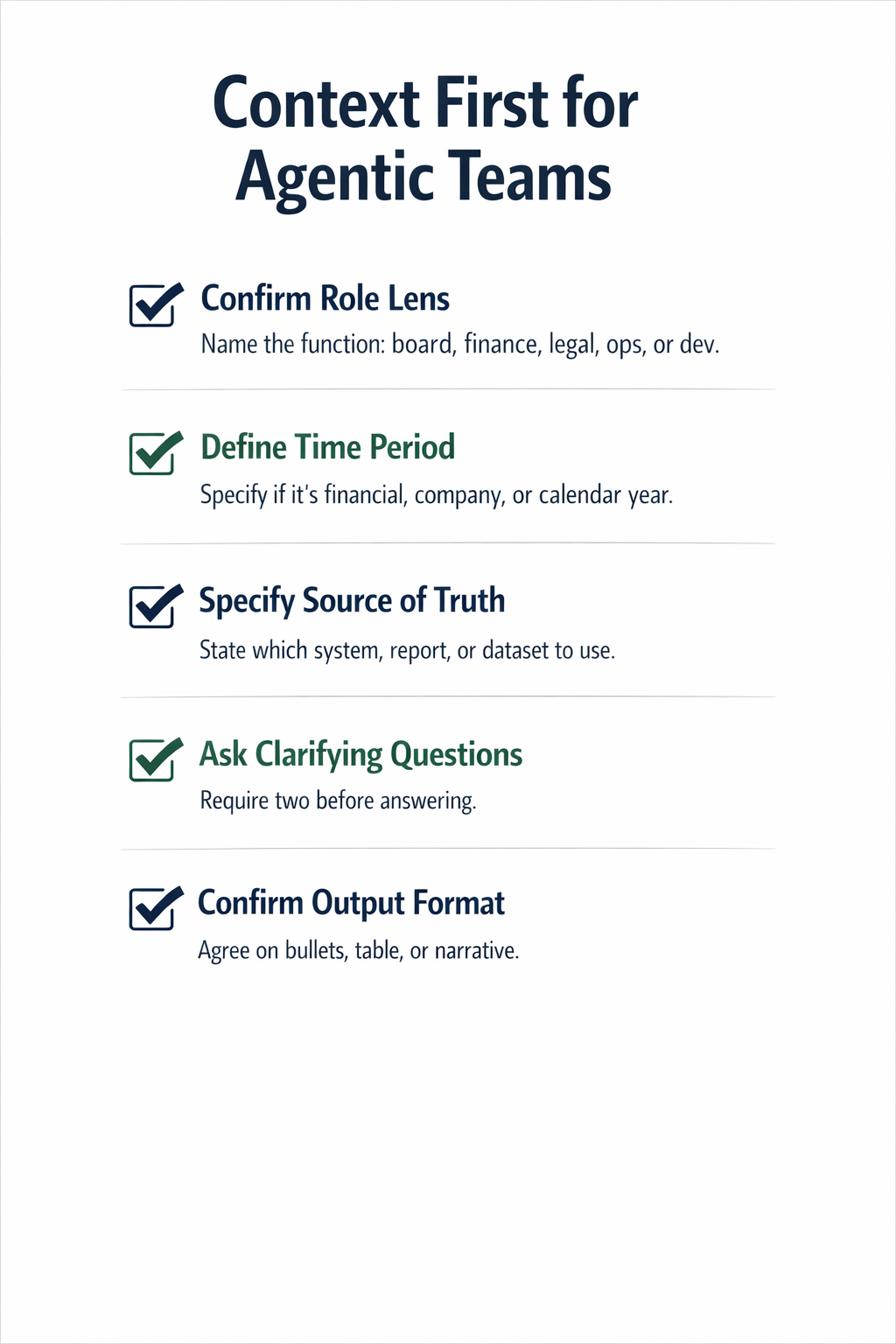

The Context First checklist (what to bake into your agent and your prompts)

If you only take one thing from this post, take this checklist. It is deliberately simple, because simple is easier to operationalise.

Step 1: Identify the role lens

Make the agent ask, or infer safely from the session profile, the lens the user is operating in.

Examples of lenses:

- Board or leadership (strategic, directional, risk and trade offs)

- Finance (definitions, periods, reconciliations, auditability)

- Legal (obligations, wording precision, jurisdiction)

- Operations (process reality, constraints, handovers)

- Development (systems, interfaces, failure modes, logs)

Prompt pattern you can copy:

- "Which lens should I answer from (board, finance, legal, ops, dev), and who is the audience for the output?"

If you are capturing role in a user profile, be explicit about privacy. Only store what you need, store it securely, and tell people what is being stored and why.

Step 2: Define the ambiguous terms (before numbers)

This is where most cross functional errors hide.

Common ambiguous terms:

- "year 21, 22" (financial year, calendar year, company year)

- "customer" (paying account, active user, contract, household)

- "margin" (gross margin, contribution margin, net margin)

- "risk" (delivery risk, compliance risk, financial risk)

- "ready" (spec complete, code complete, deployable, signed off)

Prompt pattern you can copy:

- "Before I answer, can you confirm what you mean by

year 21, 22(financial year, company year, or calendar year), and which definition of margin you want?"

Step 3: Specify the source of truth and the boundary

Agents can write fluent text without being grounded in your actual systems. That is where trouble starts.

You want the agent to ask:

- Which system is authoritative for this question?

- What is the entity boundary (which business unit, region, product line)?

- What is the data freshness requirement (yesterday, month end, last audited close)?

Prompt pattern you can copy:

- "Which system should I treat as the source of truth for this answer, and what is the scope (entity, region, product, time period)?"

If the agent cannot access the source of truth, it should say so, and ask you how to proceed. That transparency is a feature, not a failure.

Step 4: Ask clarifying questions before answering (a fixed minimum)

This is a guardrail I like because it is measurable.

For cross functional questions, require the agent to ask a minimum of two clarification questions before giving an answer, unless the question is already fully specified.

My default two are:

1) role lens and audience

2) time period and definition confirmation

You can tune this by function. Legal might need jurisdiction. Finance might need consolidation scope.

Step 5: Confirm the output format expected

This is where you stop an agent delivering a developer style answer to a board question, or a board style answer to a finance reconciliation.

Confirm:

- length (one paragraph, one page, detailed)

- structure (bullets, table, narrative)

- level (strategic summary vs operational steps)

- confidence and uncertainty (what is known, what is assumed, what is missing)

Source: https://documentation.suse.com/suse-ai/1.0/html/AI-preventing-hallucinations/index.html

"The clearer the prompt, the less the LLM relies on assumptions or creativity. A well-defined prompt guides the model toward specific information, reducing the likelihood of hallucinations."

A small experiment you can run this week (and how to measure it)

If you want to make this real, do a small test with one recurring cross functional question, ideally one that includes dates, periods, or definitions.

Run two versions:

Version A: Answer immediately

Let the agent respond without clarifiers.

Version B: Context First

Force the agent to ask the two clarifying questions (lens, time period definition) before it answers.

Measure three things for a week:

- Correction rate: how often someone has to fix the answer or restate the question

- Confidence: how confident the team feels using the output (a simple 1 to 5 score works)

- Cycle time: time from question to usable answer

You might find Version B takes slightly longer per interaction. The point is whether it reduces rework and increases trust across the team.

What I want you to remember

Cross functional language is not noise, it is signal. It tells you what people care about, and which definitions they are assuming.

If you do not capture that context, the agent will fill the gaps. Sometimes it will guess correctly. Sometimes it will guess confidently and be wrong.

Design for the pause. Design for the clarification. Design for explicit definitions and data boundaries.

That is how you build agents that behave like good colleagues.

Links

- https://openai.com/index/why-language-models-hallucinate/

- https://www.tcg.com/blog/ai-hallucinates-when-you-ask-the-wrong-question/

- https://documentation.suse.com/suse-ai/1.0/html/AI-preventing-hallucinations/index.html

- https://galileo.ai/blog/mastering-rag-llm-prompting-techniques-for-reducing-hallucinations

- https://botscrew.com/blog/guide-to-fixing-ai-hallucinations/

Quotes

Source: https://www.tcg.com/blog/ai-hallucinates-when-you-ask-the-wrong-question/

"An LLM hallucinates when it believes that’s what the prompt is asking for. The model defaults to a mode of creative plausibility unless you explicitly instruct it to operate under the constraints of verifiable fact."

Robert Buccigrossi, AI Hallucinates When You Ask The Wrong Question, TCG, 2026-01-04

Source: https://documentation.suse.com/suse-ai/1.0/html/AI-preventing-hallucinations/index.html

"Vague queries can lead to random or inaccurate answers. Lack of clear context. When the language model lacks context, it can fabricate answers."

SUSE LLC and contributors, Preventing AI Hallucinations with Effective User Prompts, SUSE AI 1.0, 2025-12-18

Source: https://documentation.suse.com/suse-ai/1.0/html/AI-preventing-hallucinations/index.html

"The clearer the prompt, the less the LLM relies on assumptions or creativity. A well-defined prompt guides the model toward specific information, reducing the likelihood of hallucinations."

SUSE LLC and contributors, Preventing AI Hallucinations with Effective User Prompts, SUSE AI 1.0, 2025-12-18