Why I Am Writing A Thank-You Note To The AI Universe

If you lead anything in 2025, AI is now in every board pack, strategy away day, and nervous investor question.

I am writing this as a thank-you note, not a product pitch.

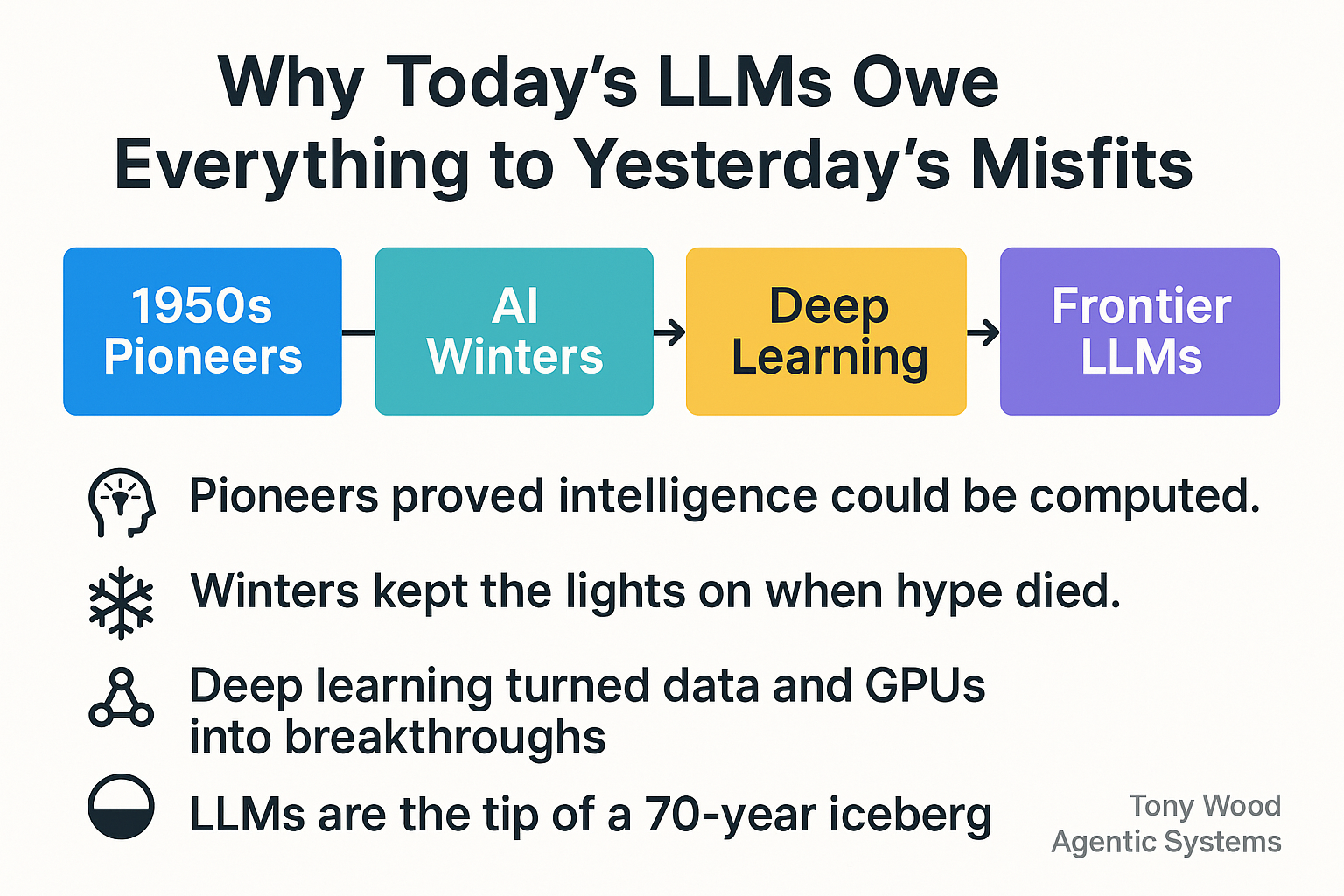

A thank-you to the long, messy relay of people and ideas that took us from room‑sized calculators to systems that can draft policy, generate code, and simulate whole environments.

And a reminder that the magic is not in the model names. It sits in the choices leaders make about how these tools shape work, value, and power.

Thank You To The Early AI Pioneers

Modern AI did not begin with chatbots. It began with a handful of people asking strange questions at a time when computers filled rooms.

As one historical account puts it:

"While this groundwork was being laid, the earliest computers, including ENIAC and UNIVAC, emerged in the 1940s and early '50s. In 1950, Alan Turing asked the question, 'Can machines think?' and introduced the Turing Test as a way of measuring machine intelligence. In 1956, John McCarthy coined the term 'artificial intelligence' at the Dartmouth Summer Research Project, widely considered the birth of the field."

Those were leadership acts.

They were not optimising quarterly performance. They were betting their careers on an idea that intelligence could be expressed in circuits and symbols.

For leaders today, this is a useful lens.

The breakthroughs you will thank yourself for in ten years will not look obvious at the time. They will look the way Turing’s question did in 1950: a little awkward, slightly out of place, but stubbornly important.

The practical question: where in your portfolio are you making space for those awkward but essential AI questions?

Thank You To The People Who Survived The AI Winters

We talk a lot about breakthroughs and almost nothing about the years when nothing seemed to work.

There were long stretches when AI looked like a bad bet. As one recent history summarises it:

"Funding for AI dried up. The period between the late 1970s and the early 1990s is often referred to as the 'AI winter.' Researchers, now with limited resources, continued to work quietly, producing incremental but essential improvements that paved the way for the field’s resurgence decades later."

Quiet, incremental work is not glamorous.

Yet that is where most of the compound interest lives.

The optimisation tricks, the better algorithms, and the obscure conference papers that today’s large language models rely on came, in large part, from people who kept going when the spotlight had moved on.

If you lead a business now, you probably have your own winters. Projects that feel stalled. Teams doing invisible plumbing.

The leadership move is to see that work clearly, protect it where it matters, and be explicit about why it counts. Without those winters, there is no summer.

Thank You To The Sci‑Fi Writers And Speculative Thinkers

Before we had models, we had metaphors.

Long before most people had seen a computer, let alone an LLM, science fiction was doing the hard work of giving society language for what AI might mean.

Policy analysts today are blunt about this link:

"Science fiction is often the earliest ‘policy lab’ for issues presented by emerging technologies—shaping cultural perceptions, ethical debates, and, increasingly, governmental attention to AI. From Isaac Asimov’s ‘Three Laws of Robotics’ to countless more recent works, fiction has supplied the metaphors and mental models policymakers use to frame both hope and risk."

Those stories made it possible to ask sensible questions about robots, autonomy, and control decades before the technology existed.

For leaders, this is a nudge to take imagination seriously.

The stories your organisation tells about AI, internally and externally, will shape how your people use it. If the only narrative is cost cutting, you will get narrow, fearful adoption. If the narrative is thoughtful augmentation, you give teams permission to experiment, to raise ethical concerns, and to look for shared upside.

Your comms, your town halls, your strategy decks are your own kind of sci‑fi. Use them wisely.

Thank You To Deep Learning And The Generative Wave

Fast forward to the 2010s and the story shifts from imagination to scale.

Cheap compute, oceans of data, and breakthroughs in deep learning meant that pattern recognition went from clunky to startling. Vision systems outperformed humans on specific benchmarks. Speech systems became usable. Then, in 2017, came the transformer architecture, which scaled sequence prediction into something that could read and write much like we do.

From a leadership angle, the key point is that this wave made AI feel like infrastructure rather than novelty.

You no longer need a research lab to benefit. You need clarity on where prediction and generation actually create value in your organisation, and you need the courage to start small pilots instead of waiting for perfect certainty.

The executives who bank the most value are not the ones with the fanciest roadmap. They are the ones who paired curiosity with disciplined experimentation while everyone else was still writing position papers.

Thank You To The Frontier LLM Zoo

By 2025, the landscape feels surreal.

There is a thriving “zoo” of large language models and multimodal systems, each with its own name, shape, and personality.

One 2025 roundup of popular models describes it like this:

"Some of the most popular large language models in 2025 include: OpenAI’s GPT-5, Anthropic’s Claude 3.7, Google Gemini models, Meta Llama 3, Alibaba Qwen 2.5 and DeepSeek R1. These LLMs power a variety of applications, from chatbots and coding assistants to content generation and research. The rapid release cadence and playful codenames have created a true ‘zoo’ of frontier models."

For leadership teams, this zoo can feel overwhelming.

The instinct is to ask “which model is best” and stop there.

More useful questions are “which workflows in our world are most ready to be co‑run with these systems?” and “what guardrails do we need before we scale them?”

The models will keep changing. Your responsibility is to build a way of working that can change with them: vendor‑agnostic where possible, experiment‑driven, grounded in real process metrics rather than slide‑deck promises.

Treat the zoo as a set of tools you audition, not a religion you sign up to.

Thank You To The People Building Generative Models And World Models

Under the headlines, another quiet shift is underway.

Generative models are learning not just to autocomplete, but to plan, act, and simulate.

World models try to give these systems an internal sense of their environment, so they can rehearse actions in a “dream world” before touching real customers, robots, or markets. In 2025, leading research workshops are full of work on how to represent complex environments, how to keep these models grounded, and how to combine them with agents that can pursue goals safely.

For leaders, this matters because it points to the next frontier of automation.

Today’s productivity gains come from “copilots” that help humans write and reason. Tomorrow’s may come from AI agents that can run longer, more complex tasks with less hand‑holding.

If you are not already mapping which of your processes could be safely trialled with agentic workflows, now is the time. Start with bounded, observable systems, like internal analytics or back‑office operations, and pair every experiment with clear monitoring and an off‑switch.

Thank You To The Ecosystem Around The Models

None of this happens in isolation.

There are platform and infra teams keeping clusters healthy. Open source communities reproducing results and holding big labs to account. Regulators and standard‑setters trying, imperfectly but earnestly, to keep risk attached to responsibility.

One major AI report captures this ecosystem view in simple terms:

"Responsible AI requires strong governance and an ongoing commitment to identify risks, improve model safety, and advocate for fair outcomes. No technology is built in isolation—standards, open source contributions, and transparent reporting are all crucial to advancing AI that benefits everyone."

Translate that into the boardroom and you get a clear leadership agenda:

- Governance is not optional. Someone on your team must own AI risk the way someone owns financial risk and cyber risk.

- Transparency is strategic. Documenting how you use AI will soon be as expected as publishing accounts.

- Ecosystem thinking is a moat. The allies you build now in standards bodies, research networks, and open communities will shape what is possible later.

If your AI plan starts and ends with a single vendor contract, it is not a plan. It is a dependency.

Gratitude And Responsibility: Prosperity Is Not Guaranteed

It is easy to be grateful for the productivity gains, the clever demos, the novelty.

The harder work is to be grateful and clear‑eyed at the same time.

The most honest assessments of AI progress all circle the same point: the upside is real, but who benefits is a choice, not a law of nature.

As a leader, that means three practical responsibilities.

First, skills and literacy. You cannot outsource understanding. Your teams need time and support to learn how these systems behave, where they fail, and how to supervise them.

Second, guardrails and incentives. If every AI deployment in your organisation is measured only on short‑term savings, you will drift towards brittle automation and reputational risk. Build in metrics for safety, fairness, and customer experience, and make them visible.

Third, distribution. Use AI to widen access to knowledge and opportunity inside your organisation, not narrow it. The biggest wins often come when you empower the people closest to the work with the best tools, instead of gating them behind a small central team.

Gratitude without responsibility is sentiment. Gratitude with responsibility is strategy.

Closing Reflection: What You Choose To Build Next

If you zoom out, the pattern is simple.

Founders of a field who asked strange questions when computers were new.

Researchers who kept publishing through AI winters.

Writers who gave us the language to talk about robots and their ethics long before real robots arrived.

Engineers and scientists who turned deep learning and transformers into the generative wave.

An entire ecosystem of model builders, infra teams, open‑source contributors, policymakers, and safety researchers doing the slow work that makes fast progress possible.

They handed you an environment in 2025 where your organisation can use AI meaningfully without building everything from scratch.

What you do with that gift is the leadership test.

You can chase short‑term gains and hope for the best.

Or you can treat this moment as a responsibility: to experiment with intent, to share the benefits, and to build systems that your future self, your teams, and your regulators will still be grateful for.

Call to Action: Before the week is out, take one focused hour with your leadership team to draft your own “AI gratitude and responsibility” list. Name the people and ideas you are standing on, and agree one concrete decision that will make your organisation’s use of AI safer, fairer, and more ambitious in the next 90 days.

Links:

- The History of Artificial Intelligence, https://www.ibm.com/think/topics/history-of-artificial-intelligence, 2024-03-12

- Science Fiction as the Blueprint: Informing Policy in the Age of AI and Emerging Tech, https://www.orfonline.org/research/science-fiction-as-the-blueprint-informing-policy-in-the-age-of-ai-and-emerging-tech, 2023-10-20

- Best 44 Large Language Models (LLMs) in 2025, https://explodingtopics.com/blog/list-of-llms, 2025-05-30

- World Models: Understanding, Modelling and Scaling – ICLR 2025, https://iclr.cc/virtual/2025/workshop/24000, 2025-05-10

- Responsible AI: Our 2024 report and ongoing work, https://blog.google/technology/ai/responsible-ai-2024-report-ongoing-work/, 2024-06-25