Stop Trusting AI Like A Calculator: What Deloitte’s Government Slip-Ups Should Teach Every Leader

I have been watching the recent Deloitte stories unfold, where AI-generated content and fabricated citations ended up in government reports, and I keep coming back to the same thought: this is not really about one firm. It is about how all of us think about AI at work.

For thirty years we have trained ourselves to trust systems. You put data in, something processed it in the background, and you trusted the result. In most cases that trust was earned, because those systems were deterministic, slow to change, and wrapped in governance.

AI is different, and the Deloitte cases are a brutal reminder of what happens when we forget that.

Why I Am Writing This Blog Post

From what is publicly reported, Deloitte used AI to help produce material for government clients, and fabricated research and citations slipped through into final reports.

One summary captures the Canadian situation starkly:

"Deloitte submitted a report to the Canadian government that cited AI-generated, fabricated research, igniting public backlash and calls for greater transparency and validation of outputs from consultancies and tech vendors."

In Australia, the pattern is similar, this time with money going back on the table:

"Deloitte has agreed to repay the Albanese government after admitting it relied on artificial intelligence to generate parts of a $440,000 report that included erroneous and fabricated sources, triggering increased scrutiny on consulting firms’ AI quality control and oversight."

I am not interested in throwing rocks at Deloitte. I am interested in the mental model that lets smart teams, with robust processes on paper, still make this mistake.

Because if they can slip, so can you and I.

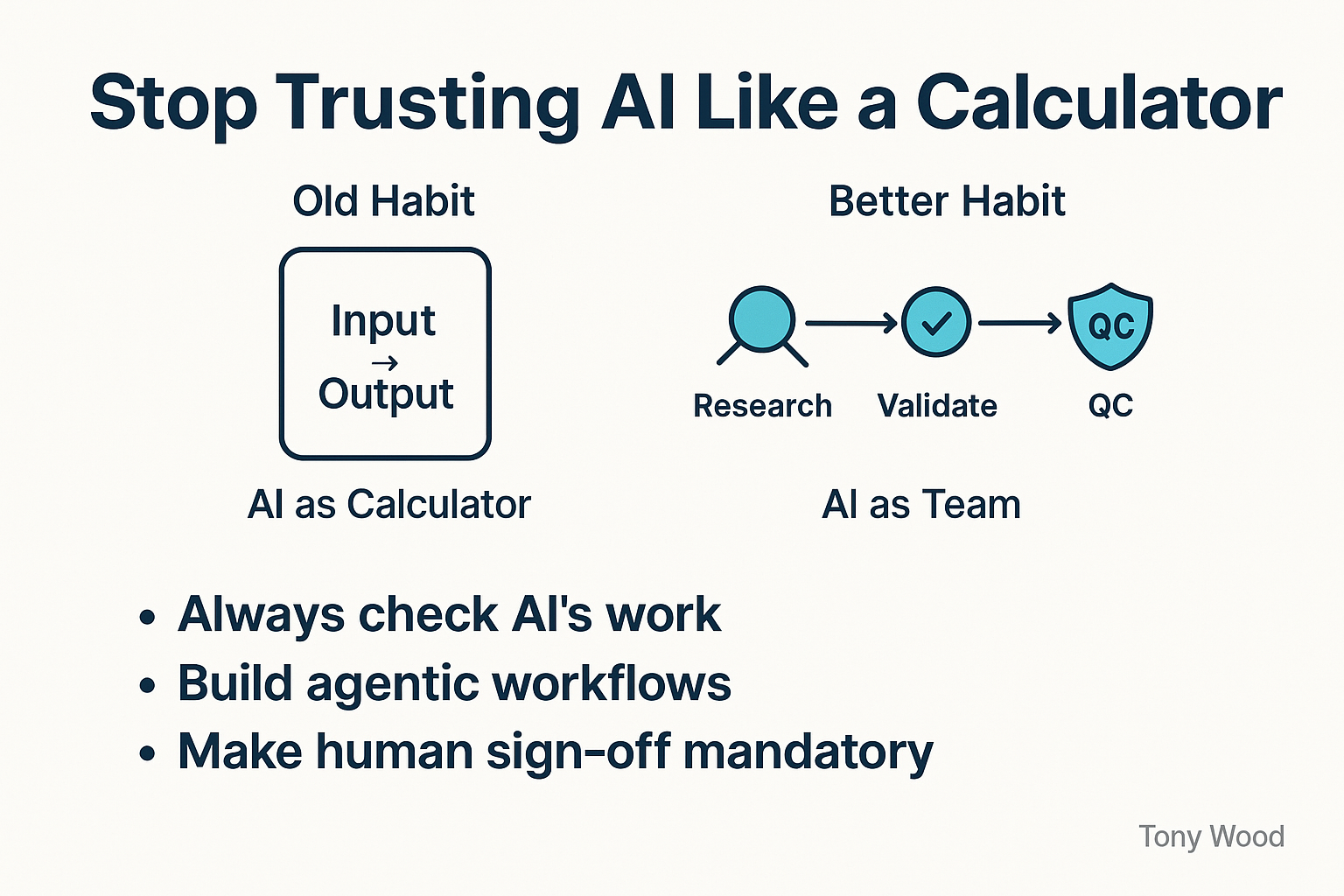

The Trap Of Trusting Old Systems

Most leaders in senior roles today grew up with systems that behaved like calculators.

You ran a query on the finance system, you trusted the numbers.

You pulled a report from the CRM, you assumed it reflected the database.

Those instincts were not foolish. They were built on top of software that was engineered to be consistent, with clear rules and clear failure modes. When something was wrong, it was usually obviously broken.

The side effect is that we trained a whole generation to see system output as “the answer”. Not a suggestion, not a draft, but truth.

With AI, that habit becomes dangerous.

Why AI Is Different: Variable Output, Variable Input

AI models, especially the large language models sitting behind agents and chatbots, are probabilistic.

The same input can give you a different answer tomorrow.

Two people asking the same question can get two different answers at the same time.

That is not a bug, it is the feature that makes AI flexible and creative. It can synthesise, reframe and rephrase on the fly. It lets you explore options and scenarios.

The flip side is obvious: if the input varies and the internal reasoning is probabilistic, the output will vary too. Sometimes it will be spot on. Sometimes it will be plausible nonsense.
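To make "probabilistic" concrete, here is a minimal toy sketch in Python. It is not how any particular model works internally; the candidate answers, their scores and the `sample_answer` helper are all made up for illustration. It only shows the basic idea that an answer is drawn from a weighted set of options rather than looked up.

```python
import math
import random

# Hypothetical scores for three candidate answers to one prompt (made-up numbers).
SCORES = {"approved": 2.0, "rejected": 1.5, "pending": 0.5}

def sample_answer(scores, temperature, rng):
    """Pick one answer by sampling from a softmax over the scores."""
    if temperature == 0:
        # Greedy choice: always return the highest-scoring answer.
        return max(scores, key=scores.get)
    # Higher temperature flattens the weights, so unlikely answers appear more often.
    weights = [math.exp(score / temperature) for score in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]

rng = random.Random()  # unseeded on purpose, so each run can differ
print([sample_answer(SCORES, 1.0, rng) for _ in range(5)])  # varies run to run
print([sample_answer(SCORES, 0, rng) for _ in range(5)])    # same answer each time
```

Run the first line twice and you will usually see different lists from the same input. In this toy, a temperature of zero removes the variation, but it does nothing to make the top-scoring answer true.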

Even the people building this technology are very clear about that risk:

"Generative AI systems have a tendency to 'hallucinate,' creating content that appears plausible but is wholly fabricated. This is exacerbated when organizations place outright trust in AI-generated outputs without effective validation procedures, highlighting a new imperative for leaders: treat AI as a tool whose work needs to be checked, not as a source of truth."

If you treat AI like the old finance system, you will get hurt.

Treat AI Like A Team, Not A Machine

Here is the mindset shift that I have seen work best.

Do not think of AI as a magic box that produces answers.

Think of it as a group of junior team members, each with a specific job. Fast, tireless, surprisingly capable, but still needing structure, supervision and sign-off.

You would never:

- Let a graduate draft a major government report and send it unedited.

- Ask one analyst to do research, analysis, writing and quality control for a £500,000 engagement, with no review.

- Allow a contractor to invent citations and slide them into a board paper unchecked.

Yet, when we hand the same scope to a single AI prompt, that is effectively what we are doing.

The right question for leaders is not “Can the AI do this task?” but “What role should AI play in this team, and who is accountable for checking its work?”

Designing An Agentic Workflow: Build An AI Crew, Not A Single Magic Box

Once you treat AI as a team, you can design an agentic workflow that mirrors how strong organisations already operate.

Think of a simple five-agent model for content or research work:

- Research Agent
  - Scans sources.
  - Summarises what is out there.
  - Provides a starting pack of material and perspectives.

- Validation Agent
  - Cross-checks citations and facts.
  - Flags gaps and contradictions.
  - Looks specifically for hallucinations and weak evidence.

- Content Agent
  - Drafts the narrative or report.
  - Structures the argument for the intended audience.
  - Adapts tone and length.

- Quality Assurance Agent
  - Checks clarity, coherence and alignment with the brief.
  - Tests whether claims are supported by the references.
  - Looks for internal inconsistencies.

- Compliance / Policy Agent
  - Reviews for regulatory, ethical and brand risks.
  - Applies internal standards, templates and legal constraints.
  - Escalates anything that needs human legal review.

Each of these agents is an AI worker, but each handoff has a named human who owns the outcome.

For leadership teams, the pattern to copy is simple:

- Break big AI tasks into roles that match how your people work today.

- Assign each role to an “AI agent” plus a human owner.

- Decide which steps are mandatory for high-risk work, such as government reports or board papers.

- Put a human sign-off step at the end, with clear accountability.

- Log sources and checks, so that if something does go wrong, you can see which control failed.

This is not about adding red tape. It is about making sure AI behaves like a junior colleague inside a familiar workflow, rather than a rogue operator.
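For teams that want to see the shape of this in code, the pattern above can be sketched roughly as follows. This is a minimal illustration under my own assumptions, not a reference implementation: `Stage`, `Run`, `run_pipeline` and the `approve` callback are hypothetical names, and each "agent" is a plain function standing in for a real AI call.

```python
from dataclasses import dataclass, field
from typing import Callable

# Assumption: these role names mirror the five agents described above.
REQUIRED_FOR_HIGH_RISK = {"research", "validation", "content", "qa", "compliance"}

@dataclass
class Stage:
    name: str                    # role this agent plays
    agent: Callable[[str], str]  # stand-in for a call to an AI agent
    owner: str                   # named human accountable for this handoff

@dataclass
class Run:
    draft: str
    released: bool = False
    log: list = field(default_factory=list)  # control log of every check

def run_pipeline(brief, stages, approve, signoff_owner, high_risk=True):
    """Pass the work through each stage; stop as soon as a human owner says no."""
    missing = REQUIRED_FOR_HIGH_RISK - {s.name for s in stages}
    if high_risk and missing:
        raise ValueError(f"high-risk work is missing stages: {sorted(missing)}")
    # The final human sign-off is modelled as a last step that changes nothing.
    steps = list(stages) + [Stage("final sign-off", lambda text: text, signoff_owner)]
    run = Run(draft=brief)
    for step in steps:
        output = step.agent(run.draft)
        ok = approve(step, output)  # the human decision, supplied by the caller
        run.log.append({"step": step.name, "owner": step.owner, "approved": ok})
        if not ok:
            return run  # released stays False; the log shows which control failed
        run.draft = output
    run.released = True
    return run

# Usage: the validation owner rejects the output, so nothing is released.
roles = ["research", "validation", "content", "qa", "compliance"]
stages = [Stage(r, lambda text, r=r: f"{text} [{r} done]", f"owner of {r}") for r in roles]
run = run_pipeline("Draft brief", stages,
                   lambda step, output: step.name != "validation",
                   "engagement partner")
print(run.released, run.log[-1])
```

The design choice that matters is that the human decision is an explicit input to every step, and a rejected step leaves a log entry naming the role and the owner rather than failing silently.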

What The Deloitte Cases Are Teaching Us

When you read across the public reporting from Australia and Canada, including the Newfoundland and Labrador case, a common theme emerges.

AI-generated content and fabricated citations were allowed to flow straight through into client-facing reports without robust validation. Governments were left dealing with the backlash.

One trade summary describing the provincial case puts it bluntly:

"The Newfoundland and Labrador government is grappling with fallout after it was found Deloitte’s report was marred by false citations generated by artificial intelligence, raising questions about proper oversight and the need for rigorous quality control in vendor AI deliverables."

The details differ by country and contract, but the core lesson is consistent:

- If AI research is not separated from AI drafting, hallucinations are more likely to slip through.

- If validation is not a distinct step, nobody “owns” catching fabricated sources.

- If QA and compliance are not formal roles, people assume “the system” must have it covered.

- If leadership does not set expectations, teams fall back to the calculator mindset.

These are not exotic AI problems. They are governance problems that happen to involve AI.
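Making validation a distinct step can start very small. The sketch below assumes a hypothetical register of sources that a human has actually opened and confirmed, and flags any URL cited in a draft that is not on it. The register contents, the example URLs and the `unverified_citations` helper are illustrative only, and a real check would also need to confirm that each source says what the draft claims.

```python
import re

# Hypothetical register of sources a named person has opened and confirmed.
VERIFIED_SOURCES = {
    "https://example.org/workforce-study-2023",
}

# Simple URL matcher; stops at whitespace and common closing brackets.
URL_PATTERN = re.compile(r"https?://[^\s)\]>]+")

def unverified_citations(draft: str, verified: set[str]) -> list[str]:
    """Return cited URLs missing from the register, in order of first appearance."""
    flagged = []
    for match in URL_PATTERN.findall(draft):
        url = match.rstrip(".,;")  # drop sentence punctuation stuck to the URL
        if url not in verified and url not in flagged:
            flagged.append(url)
    return flagged

draft = (
    "Staffing gaps are documented in https://example.org/workforce-study-2023. "
    "A second study (https://example.org/made-up-paper) reaches the same view, "
    "as does https://example.org/made-up-paper in its appendix."
)
print(unverified_citations(draft, VERIFIED_SOURCES))
```

Anything this returns goes to the human owner of the validation step before drafting continues. An empty list is not proof of accuracy; it only means every cited link has been looked at by a person.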

Practical Steps For Leaders: How To Stop AI From Embarrassing Your Brand

If you sit on a board or executive committee, or lead a major function, you do not need to understand neural networks. You do need a simple, actionable playbook.

Here is a starter list you can apply this quarter:

- Ban “single-step” AI on high-stakes work.
  Any output that goes to a minister, regulator, board, or major client must go through, at minimum, research, validation and human sign-off.

- Insist on AI roles, not “the AI did it”.
  For critical workflows, ask your teams: which agent is doing research, which is validating, which is drafting, and who is the human owner at each stage?

- Treat AI outputs as drafts, by policy.
  Make it explicit that AI content is never final. It is raw material for your people, not finished product.

- Ask to see the control log.
  When reviewing AI-assisted work, ask “Which checks did this go through?” and “What sources back these claims?” Normalise that scrutiny.

- Align vendors to your standards.
  When you hire a consultancy or tech partner, ask them to describe their AI workflows and validation steps. If their process is “we check it manually”, push for detail.

- Educate your senior team on hallucination risk.
  Use real numbers and examples, not abstract warnings. One business-focused analysis, the Mint.ai post listed under Links, frames the stakes around a $67.4 billion risk figure.

You do not need a ten-page AI policy to get started. You need a shared understanding that AI is a junior colleague whose work is always checked.

Closing Reflection: Your Next AI Decision

The uncomfortable truth is that the next Deloitte-style story could feature any logo.

All it takes is one unreviewed AI report, one high-profile client, and one set of fabricated references that nobody was responsible for catching.

The flip side is encouraging.

If you, as a leader, decide that:

- AI is treated like a team, not a calculator.

- Agentic workflows mirror your best human processes.

- Verification is a habit, not an afterthought.

Then AI becomes a powerful accelerator instead of a reputational risk.

So the question I want to leave you with is simple:

Where, in your organisation today, are you still trusting AI like a calculator, and what is one concrete step you will take this month to start running it like a well-governed team?

Links:

- Deloitte Canada AI citations coverage (high trust): Fortune article on fabricated AI-generated research in a Canadian government report, 2025-11-25.
  https://fortune.com/2025/11/25/deloitte-caught-fabricated-ai-generated-research-million-dollar-report-canada-government/

- Deloitte Australia AI report refund story (high trust): The Guardian coverage of the $440,000 report and repayment to the Albanese government, 2025-10-06.
  https://www.theguardian.com/australia-news/2025/oct/06/deloitte-to-pay-money-back-to-albanese-government-after-using-ai-in-440000-report

- Newfoundland and Labrador fallout from AI errors (high trust): HR Reporter summary of provincial response to Deloitte report with false AI-generated citations, 2025-11-27.
  https://www.hrreporter.com/focus-areas/automation-ai/ai-errors-province-grapples-with-deloitte-report-marred-by-false-citations/393782

- Generative AI quality and hallucination risks (high trust): Deloitte Insights research on data integrity and model validation for enterprise AI, 2024-03-14.
  https://www.deloitte.com/us/en/insights/topics/digital-transformation/data-integrity-in-ai-engineering.html

- Business impact of AI hallucinations (medium trust): Mint.ai blog explaining the $67.4 billion risk figure and verification practices for marketers, 2024-09-12.
  https://www.mint.ai/blog/when-ai-gets-it-wrong-why-marketers-cant-afford-hallucinations

Quotes:

- Deloitte Canada AI citations coverage (high trust), Nicholas Gordon, 2025-11-25: "Deloitte submitted a report to the Canadian government that cited AI-generated, fabricated research, igniting public backlash and calls for greater transparency and validation of outputs from consultancies and tech vendors."
  https://fortune.com/2025/11/25/deloitte-caught-fabricated-ai-generated-research-million-dollar-report-canada-government/

- Deloitte Australia AI report refund story (high trust), Paul Karp, 2025-10-06: "Deloitte has agreed to repay the Albanese government after admitting it relied on artificial intelligence to generate parts of a $440,000 report that included erroneous and fabricated sources, triggering increased scrutiny on consulting firms’ AI quality control and oversight."
  https://www.theguardian.com/australia-news/2025/oct/06/deloitte-to-pay-money-back-to-albanese-government-after-using-ai-in-440000-report

- Newfoundland and Labrador fallout from AI errors (high trust), Staff Reporter, 2025-11-27: "The Newfoundland and Labrador government is grappling with fallout after it was found Deloitte’s report was marred by false citations generated by artificial intelligence, raising questions about proper oversight and the need for rigorous quality control in vendor AI deliverables."
  https://www.hrreporter.com/focus-areas/automation-ai/ai-errors-province-grapples-with-deloitte-report-marred-by-false-citations/393782