AI at Work: Training, Not Surveillance – Why Digital Dignity Must Lead the Way

Here’s the question that keeps landing on my desk: How can AI support the people whose jobs feel under threat? I keep hearing from managers and teams worried that AI is arriving not to help but to hover overhead and monitor. I get it. If you introduce a digital system that watches every move, you’re not upskilling anyone – you’re sowing the seeds of distrust.

For me, the real opportunity starts elsewhere. We can and should use AI to help employees build new skills, grow their confidence, and stay relevant as the world changes. But if the rollout crosses into digital surveillance, we’re lost before we start. Let’s talk about how to get this balance right.

The Danger of Letting AI Become a Workplace Spy

Let’s not dress this up. If you bring in AI tools that track every click and conversation, it feels a lot like putting someone on a performance plan and then looming over them to catch them out. That’s not support. That’s a digital shadow creating worry, not value.

Ashmita Shrivastava puts it plainly in her post for Moveworks:

> “As AI becomes embedded in daily work, interactions ranging from PTO requests to software access can involve sensitive employee data. Without clear privacy guardrails, organizations may face compliance challenges, potential security gaps, and erosion of employee trust.”

> (Moveworks Blog)

I’ve seen first-hand how people react when they feel monitored rather than mentored. Morale dips. People hang back, play it safe, and look for the exit. Distrust spreads. Nobody wants to be measured for control’s sake.

The Better Way: AI as Support, Not Punishment

AI should train and support, not spy and punish. That’s the shift we need. If we approach AI as a digital coach – transparent, supportive, and focused on capability rather than compliance – we unlock its real potential for the team.

But it takes design. Boundaries. Clear communication about what’s being tracked, who holds the data, and how it’s used. As Mauricio Foeth and Fisher Phillips note:

> “Employers must ensure that the data collected is used only for the intended purposes and that the rights of employees are respected. Transparency and employee consent are also essential factors to consider when using AI systems.”

> (Fisher Phillips)

That means upskilling data belongs to the employee, not a faceless dashboard. It also means that any move to use AI for performance tracking or HR interventions comes with explicit consent, not hidden monitoring tech.

The Call for a General AI Protection Act (GAIPA)

Just as GDPR enshrined strong rights over what happens to our personal data, we need something similar to protect people working alongside AI: a clear, enforceable framework – let’s call it GAIPA, the General AI Protection Act. GAIPA would:

- Set boundaries on what workplace AI can track or infer

- Require open explanations of what data is used and why

- Put workers in control over their skill profile, learning dashboard, and digital journey

- Ban covert monitoring and make privacy protections non-negotiable

This isn’t theoretical. “Organizations should balance robust data protection with continued AI innovation. The goal? To balance AI-driven momentum with clear, enforceable data boundaries that your workforce can trust and depend on,” says Shrivastava, again highlighting the importance of trust as the core metric (Moveworks Blog).

And if you want to dig deeper into the legal and compliance side, the SHRM resource “AI in the Workplace: Data Protection Issues” unpacks many of these risks.

How Managers Can Build Trust and Lead the Change

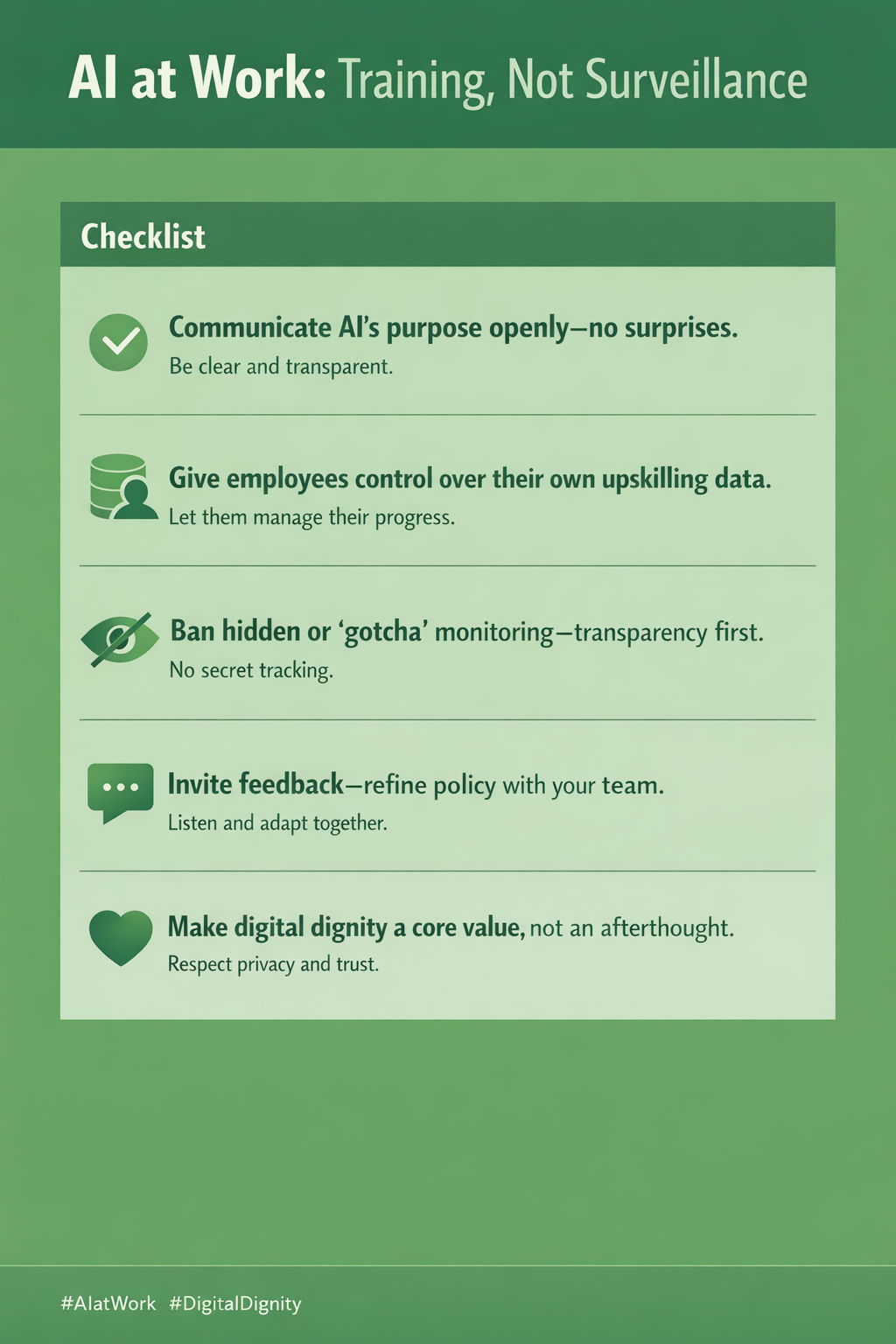

Managers – your people will only truly embrace AI if they see evidence that their growth and privacy matter most. Set the tone by being transparent about how, and why, AI is used. Invite feedback. Let colleagues see that the aim is empowerment, not scrutiny.

If you need a practical checklist, Moveworks’ best practices are worth reading for concrete, compliance-friendly steps.

And always ask this: does this AI tool help my team feel safer, smarter, and more capable? Or does it make them look over their shoulder?

The Way Forward: Dignity, Trust, and a Smarter Workplace

To my mind, if we do this right, we all win. Companies gain a workforce that’s learning and adapting; individuals keep ownership of their data and their professional pathway; and AI becomes the assistant and team-mate it ought to be – never the overseer.

If you’re designing (or buying) workplace AI, start here:

- Set privacy defaults that favour the employee

- Communicate the AI’s role openly and often

- Commit to upskilling and digital dignity, not digital monitoring
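
For the builders in the room, here is one way the first point could look in practice. Treat it as a minimal sketch, not a standard: the `PrivacyDefaults` class, its field names, and the `violations` check are all hypothetical names I’ve made up for illustration, and a real tool would need its own settings and legal review. The idea is simply that every default starts on the employee’s side, and any move toward tracking has to be disclosed and consented to.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacyDefaults:
    """Hypothetical employee-first defaults for a workplace AI tool."""
    track_clicks: bool = False                      # no click tracking unless switched on
    track_conversations: bool = False               # no conversation monitoring unless switched on
    share_learning_data_with_manager: bool = False  # the learner controls their own profile
    require_explicit_consent: bool = True           # any tracking or sharing needs opt-in
    disclose_collected_fields: bool = True          # tell people what is collected and why


def violations(settings: PrivacyDefaults) -> list[str]:
    """Return plain-language reasons a configuration drifts toward surveillance."""
    problems = []
    tracking_on = settings.track_clicks or settings.track_conversations
    if tracking_on and not settings.disclose_collected_fields:
        problems.append("covert monitoring: tracking is on but not disclosed")
    if tracking_on and not settings.require_explicit_consent:
        problems.append("tracking is on without explicit consent")
    if settings.share_learning_data_with_manager and not settings.require_explicit_consent:
        problems.append("learning data is shared without the employee's consent")
    return problems
```

With the defaults untouched, `violations(PrivacyDefaults())` returns an empty list. Switch on click tracking while hiding what is collected and the check flags covert monitoring, which is exactly the conversation you want to have before rollout rather than after.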

And keep this vision in focus: AI should elevate, not eliminate, your workforce.